Thesis Statement

"Through my dissertation, I introduce a causally grounded, extensible, approach for rating AI models for robustness by detecting their sensitivity to input perturbations and protected attributes, quantifying this behavior, and translating it into user-understandable ordinal ratings (trust certificates). "

Abstract

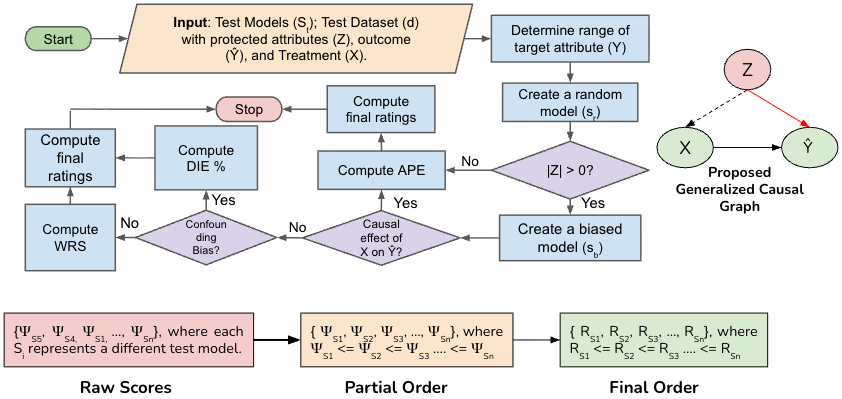

While AI models have become increasingly accessible through chatbots and diverse applications, their "black-box" nature and sensitivity to input perturbations create significant challenges for interpretability and trust. Existing correlation-based robustness metrics often fail to adequately explain model errors or isolate causal effects. To address this limitation, I propose a causally grounded framework for rating AI models based on their robustness. This method evaluates robustness by quantifying statistical and confounding biases and by measuring the impact of perturbations across diverse AI tasks.

The framework produces raw scores that quantify model robustness and translates them into ratings, empowering developers and users to make informed decisions regarding model robustness. These ratings complement traditional explanation methods to provide a holistic view of model behavior. Additionally, the method aids in the assessment and construction of robust composite models by guiding the selection and combination of primitive models. User studies confirm that these ratings reduce the cognitive load for users comparing AI models in time-series forecasting tasks and foster trust in the construction of efficient, low-cost composite AI models.
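As a rough illustration of the score-to-rating step, the sketch below maps raw scores to ordinal ratings using equal-width bins. The binning rule, the function name `assign_ratings`, the model names, and the convention that a lower raw score means less sensitivity (and therefore a better rating) are all assumptions made for illustration; the dissertation's actual translation procedure may differ.

```python
from typing import Dict


def assign_ratings(raw_scores: Dict[str, float], levels: int = 3) -> Dict[str, int]:
    """Map raw scores to ordinal ratings in 1..levels.

    Assumption: a lower raw score means less sensitivity to perturbations,
    so rating 1 marks the most robust models. Equal-width binning is a
    stand-in chosen for illustration, not the framework's documented rule.
    """
    if levels < 1:
        raise ValueError("levels must be at least 1")
    if not raw_scores:
        return {}

    lowest = min(raw_scores.values())
    highest = max(raw_scores.values())
    if highest == lowest:
        # All models behave identically, so they share the best rating.
        return {name: 1 for name in raw_scores}

    width = (highest - lowest) / levels
    ratings = {}
    for name, score in raw_scores.items():
        # Clamp so the maximum score lands in the last bin.
        bin_index = min(int((score - lowest) / width), levels - 1)
        ratings[name] = bin_index + 1
    return ratings


# Hypothetical raw scores for three models.
example = assign_ratings({"model_a": 0.05, "model_b": 0.40, "model_c": 0.90})
print(example)
```

Because the ratings are relative to the set of models being compared, adding or removing a model can shift the bins, which is one reason an ordinal rating is best read alongside the raw scores.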

Rating Workflow

From Predictions to Ratings